On customer feedback

Henry Ford of the Ford Motor Company famously said: "If I had asked my customers what they wanted, they would have asked me for a faster horse". Well, actually, he didn't say that - but it's a great story nonetheless to kickstart an important conversation: when and how to listen to customers.

Let's get the obvious out of the way: understanding the sector you operate in and the customers you're selling to is an essential skill for a PM. Without it, originating new products and product features becomes a game of luck rather than a repeatable process.

Understanding customers is generally achieved in one of three ways: by passively acquiring knowledge and experience of your sector, by looking at the hard data (either existing product metrics, or external data for prospective products), or by talking to them directly (informally, through structured customer interviews, or through surveys).

The first two are pretty self-explanatory, and there is plenty of information online on how to tackle metric-driven product development. Today I want to focus on the last notion: the idea that you can distill product insights by listening to your customers telling you what they want.

This is possible - at least in theory - but there are three important caveats I've seen PMs trip over again and again:

🃏 Selection Bias

(Self-)selection bias is a very common concept in experimental statistics: it arises when the group of volunteers giving you feedback is significantly different from the general population that your trial (or in our case, our product) targets.

If you like economics and want to see a mathematical outline of what selection bias does to a population, you can take a look at the Roy model: a classical toy model with two outcomes - in our case "give feedback" and "don't give feedback" - where the choice between them is driven by how strongly the customer feels about our product, rather than by how good the product itself is.

What this means in practice is that only the customers who are truly polarized about the product will be bothered enough to let you know, either in surveys or on social media. This polarization will be exacerbated in either the positive or the negative direction depending on the feedback channel: intuitively, people will be more negative on anonymous social media, and more forgiving in small-group, face-to-face interviews. More importantly, smaller grievances about the product will be easily missed (especially if people have larger criticisms or praise to share), which paradoxically makes customer feedback very ineffective in creating a polished product.

The largest problem that selection bias brings, however, is that customers will naturally focus on giving feedback about the product they have experienced, rather than the product you're aiming to build. This makes it very difficult to focus on the future possibilities and the roadmap ahead: especially during early stages of product development, listening to too much feedback will lead your team into an endless optimization loop, where the product is endlessly scrutinized, but does not move organically to the next step.

I know this is a handful, so let me recap: selection bias means your customers will 'self-select', and the majority of feedback will come from the customers who feel the strongest about your product (love/hate). This means:

- feedback will be overly negative or overly positive (depending on the feedback medium) compared to the actual state of your product

- small issues will be missed: it's likely you'll hear about the glaring flaws you already knew of, rather than the small grievances you missed

- especially for early feedback, since the customer only sees your current version of the product - rather than the final one - you risk getting stuck in an optimization loop, instead of building towards v 1.0
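
To make the first two points concrete, here is a small toy simulation. It is only a sketch under made-up assumptions: satisfaction is a number between -1 and 1, most customers sit near the middle, and the chance of leaving feedback grows with how strongly a customer feels. None of these numbers come from real data; the function names are hypothetical.

```python
import random

def simulate_feedback(n_customers=10_000, seed=0):
    """Toy model: customers with stronger opinions are more likely to respond."""
    rng = random.Random(seed)
    population, respondents = [], []
    for _ in range(n_customers):
        # Assumed shape: satisfaction in [-1, 1], clustered around neutral.
        satisfaction = max(-1.0, min(1.0, rng.gauss(0.0, 0.4)))
        population.append(satisfaction)
        # Assumption: probability of giving feedback equals opinion strength.
        if rng.random() < abs(satisfaction):
            respondents.append(satisfaction)
    return population, respondents

def share_extreme(scores, threshold=0.5):
    """Fraction of scores whose absolute value exceeds the threshold."""
    return sum(abs(s) > threshold for s in scores) / len(scores)

population, respondents = simulate_feedback()
print(f"strong opinions among all customers: {share_extreme(population):.0%}")
print(f"strong opinions among respondents:   {share_extreme(respondents):.0%}")
```

Under these assumptions, the respondents are noticeably more polarized than the customer base as a whole, and the mild opinions (where the small grievances live) are the ones that go missing.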

🙉 Selective listening

This one's short and sweet: as a PM, you probably have a strong vision of your product, and talk on the regular with other teams to sell your vision and get people onboard.

It is very tempting then to take all of your customers' feedback, skim it, and cherry pick the items that back your vision up. "You see guys? I thought we should do A rather than B, and the majority of customers agree".

The majority? That's very hard to conclude from a small sample of customer feedback - especially given the previously mentioned self-selection bias. Yet, the temptation will always be there. Don't be that guy/girl: you should be extra careful when listening to customer feedback to record every single point of feedback - even those that don't support your vision of the product.

Especially for doomed, cursed products that should never have been developed, customer feedback is a powerful tool to understand when it's time to pull the plug. I would even argue feedback is at its most powerful when it allows you to see that there's just no way your product can do what you dreamed of.

If you catch your colleagues doing 'selective listening' of their customers' feedback, call them out on it. Feedback should never be weaponized, and it should never be used as a validation of your pre-conceived idea of the product. If anything, it should be used as a safeguard to avoid building useless things.

🤖 Automate your feedback review

This one is very personal. I see plenty of PMs getting great results from parsing complex surveys and market overviews into planning documents and ideas. Some people just have very good empathy skills with their customers and are able to distill many hours of interviews down to actionable product development milestones.

But I can't, so I let computers do the hard work of parsing my customer feedback for me - in three easy steps.

First, I collect all of the general customer feedback I have from my social and marketing teams. In larger organizations, there are probably dedicated teams that parse all of this data already and put it in a nicely formatted database.

If not, you can probably start by scraping some comments (preferably automatically) from Twitter and other social networks. Even better, just organize a session with your customers and have them write out all of the feedback they have about your product.

Since you don't need your customers to help you put together a full narrative, but rather just want them to jot down all of their thoughts in an unstructured manner, the chances of them stopping at the most obvious flaws - or of them being nice to you (see the previous points) - will be much lower.

Second, you can take all of this data and categorize it. There are some crazy souls out there doing this by hand or with Excel - please don't. And don't use heuristics or keywords (like 'good' or 'bad') to assign scores: that's primitive and inaccurate.

Instead, you can use sentiment analysis to give a positivity score to each line of feedback - even with no-code solutions like Google Sheets. Me, I generally spin up a Jupyter Notebook with spaCy, use TextBlob for sentiment analysis (which works straight out of the box), assign some categories manually and then use a spaCy classifier to automatically bucket the remaining items of customer feedback into the categories I defined.
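
As a rough sketch of the scoring step only (the categorization step is not shown), something like the following could work. The helper names `textblob_polarity` and `score_feedback` are my own for illustration, not part of any library; the only TextBlob call used is `TextBlob(text).sentiment.polarity`, which returns a score between -1.0 and 1.0.

```python
from typing import Callable, Iterable

def textblob_polarity(text: str) -> float:
    """Polarity in [-1.0, 1.0] from TextBlob's default sentiment analyzer."""
    # Imported lazily so the rest of the sketch runs without TextBlob installed.
    from textblob import TextBlob
    return TextBlob(text).sentiment.polarity

def score_feedback(
    lines: Iterable[str], polarity_fn: Callable[[str], float]
) -> list[dict]:
    """Attach a sentiment score to each non-empty line of feedback."""
    scored = []
    for line in lines:
        text = line.strip()
        if not text:
            continue  # skip blank lines in the raw export
        scored.append({"text": text, "polarity": polarity_fn(text)})
    # Most negative feedback first, so the pain points surface at the top.
    return sorted(scored, key=lambda row: row["polarity"])
```

With TextBlob installed, calling `score_feedback(feedback_lines, textblob_polarity)` on a list of raw comments gives you one scored row per comment. Passing the scoring function in as a parameter is a deliberate choice here: it lets you swap in a different sentiment model later without touching the rest of the pipeline.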

Third, I distill this data into N buckets that represent all of the strengths and all of the weaknesses of my product. I compare this ranking with my personal ranking of the strengths and weaknesses in my vision of the product: if my view and my customers' view match, then we're on the right track. If there are large discrepancies, I go back to the drawing board, since it's clear I'm missing something.
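
The comparison in this third step can be sketched in a few lines of plain Python. This is a minimal illustration, assuming each piece of feedback has already been given a category and a sentiment score; the categories, scores and function names below are all invented for the example.

```python
from collections import defaultdict
from statistics import mean

def rank_buckets(scored_items):
    """Rank categories from weakest to strongest by mean sentiment."""
    by_category = defaultdict(list)
    for category, polarity in scored_items:
        by_category[category].append(polarity)
    averages = {cat: mean(vals) for cat, vals in by_category.items()}
    return sorted(averages, key=averages.get)

def rank_gaps(customer_ranking, my_ranking):
    """For each shared category, how many positions the two rankings differ by."""
    my_position = {cat: i for i, cat in enumerate(my_ranking)}
    return {
        cat: abs(i - my_position[cat])
        for i, cat in enumerate(customer_ranking)
        if cat in my_position
    }

# Hypothetical (category, polarity) pairs from the scoring step.
scored_items = [
    ("onboarding", -0.6), ("onboarding", -0.2),
    ("pricing", 0.1), ("pricing", -0.1),
    ("support", 0.7), ("support", 0.5),
]
customer_ranking = rank_buckets(scored_items)
my_ranking = ["pricing", "onboarding", "support"]  # my own weakest-to-strongest guess
print(customer_ranking)
print(rank_gaps(customer_ranking, my_ranking))
```

Categories with a large gap are the ones where my picture of the product and the customers' picture disagree, and those are the ones worth revisiting first.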

Especially if you're working with social media feedback of an evolving product, or NPS comments from your customers, you can keep doing this on a rolling basis once you've set up a data pipeline. This is truly a superpower that helps you steer products in the right direction without wasting weeks in survey feedback planning and collection.

Of course, if you don't feel comfortable with this flow and have no analyst at your company who can help you set it up, you can always invest in a license for a customer feedback analysis tool like Chattermill, Retently or AskNicely (there are plenty more, and I have no preference - they all do the same thing).

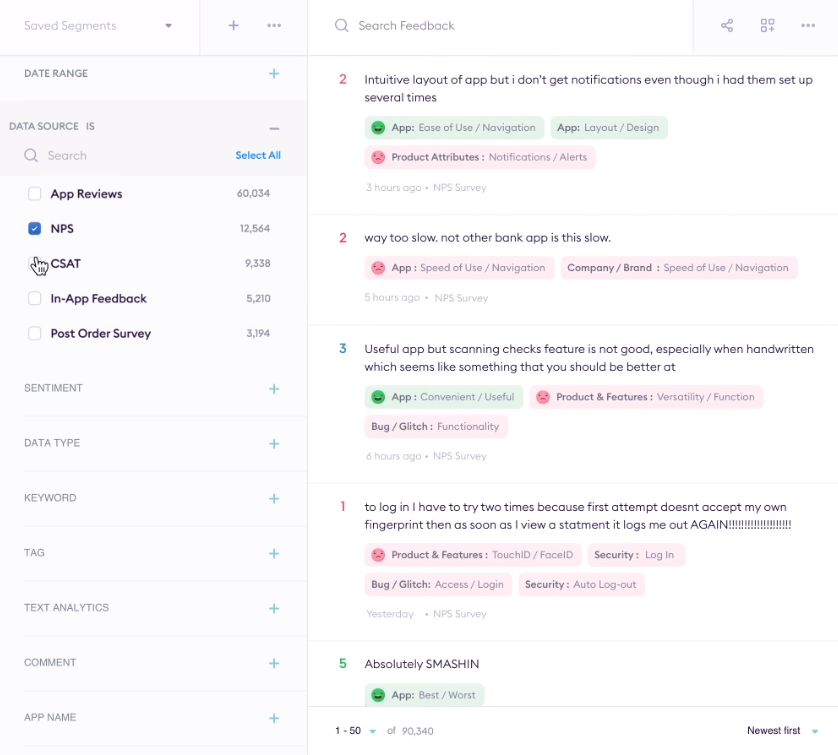

For a monthly fee, you will get a nice interface like this:

That will help you quickly sort out your customer's feedback into valuable buckets that can inspire and drive your product growth.

♻️ Wrapping up

To recap, I think it is crucial for good product managers to listen to their customers to understand how their product is faring. You can do so by analyzing product metrics, by building direct experience in a given sector, or by directly asking for customer feedback.

If you go down this route, though, you'll need to make sure:

- 🃏 that self-selection bias doesn't give you extremely polarized customers with feedback that lacks details and leads you to premature optimization

- 🙉 that you don't selectively listen only to the feedback that validates your product vision: on the contrary, use the feedback to know when to pull the plug

Finally, it is not necessary to spend days or weeks trawling through customer feedback (or to limit the amount of feedback collected): you can use 🤖 your computer to automatically score and classify the feedback you received - using either simple no-code methods like Google Sheets with a natural language API, open source libraries like spaCy and TextBlob, or dedicated analysis tools like Chattermill.

Knowing how to collect and use customer feedback is a superpower. Hope this post helps you in leveraging it for your next product!